High-Fidelity AI License Plate Recognition System

Technologies

The Challenge

Standard open-source OCR libraries need near-ideal image conditions (lighting, angle, and clarity) to work reliably. The challenge was to build a system that could handle "in-the-wild" scenarios: fog, rain, night shots, damaged plates, and extreme skew. The system also needed to filter out false positives, such as text on billboards or street signs, that often confuse standard pre-trained models. The goal was to move beyond simple API wrappers and engineer a solution that spanned the full deep learning lifecycle, from training to deployment.

Our Solution

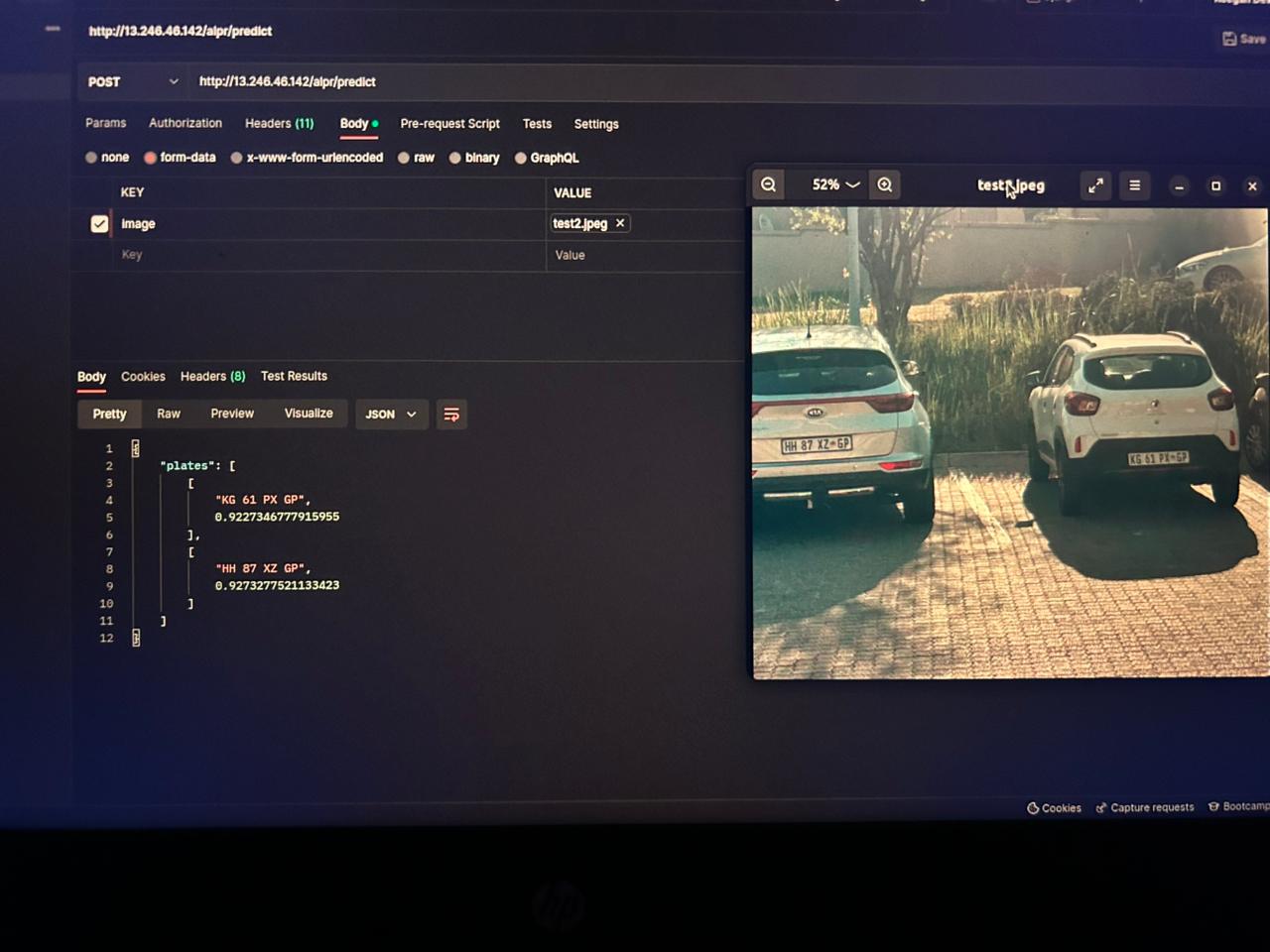

I architected an end-to-end custom pipeline that processes raw images from any source and returns structured data via a dashboard. The solution used a multi-stage computer vision approach:

- **Scene Analysis:** Pre-processing to identify vehicles and isolate the Region of Interest (ROI), filtering out background noise such as billboards.
- **Object Detection:** Implemented a fine-tuned Detectron2 model trained specifically on license plate outlines to handle occlusion and damage.
- **Image Rectification:** Developed Python scripts to geometrically straighten and de-skew angled plates before reading.
- **Custom OCR Engine:** Fine-tuned PaddleOCR on a dataset of pre-processed, real-world plates to recognize text in low-contrast environments.
- **Full-Stack Dashboard:** Built a user-friendly interface using Vue.js and Vuetify (frontend) and PHP (backend) to log detections and manage the database.
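The image rectification step can be illustrated with a small sketch. The project's actual scripts are not shown here, so this is an assumption about one common way to de-skew a plate: take the four detected plate corners, compute the perspective transform (homography) that maps them onto an upright rectangle, and use it to remap pixels. The function names (`solve_linear`, `homography`, `warp_point`) and the corner coordinates are hypothetical, and the example uses only the standard library so the math stays visible.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b using Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix [a | b]; rows are copied so the inputs stay untouched.
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography(src: Sequence[Point], dst: Sequence[Point]) -> List[List[float]]:
    """Return the 3x3 matrix mapping four src corners onto four dst corners.

    The bottom-right entry is fixed to 1, which leaves 8 unknowns and
    two linear equations per corner pair.
    """
    a: List[List[float]] = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def warp_point(h: List[List[float]], p: Point) -> Point:
    """Apply the homography to one point (with the perspective divide)."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return (
        (h[0][0] * x + h[0][1] * y + h[0][2]) / w,
        (h[1][0] * x + h[1][1] * y + h[1][2]) / w,
    )


# Hypothetical skewed plate corners (top-left, top-right, bottom-right,
# bottom-left) and the upright 300x100 rectangle they should map to.
skewed = [(120.0, 80.0), (420.0, 130.0), (410.0, 230.0), (110.0, 160.0)]
upright = [(0.0, 0.0), (300.0, 0.0), (300.0, 100.0), (0.0, 100.0)]
H = homography(skewed, upright)
```

In a real pipeline the same idea is usually delegated to an image library; OpenCV, for example, provides `cv2.getPerspectiveTransform` and `cv2.warpPerspective` for computing the matrix and resampling the image. Whether this project used those calls is not stated.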

Results & Impact

The project resulted in a fully functional, hardware-agnostic LPR system. Unlike commercial systems tied to specific cameras, this solution works with any image source (standard webcams, mobile phones, high-end security cameras). The system achieved 100% accuracy in optimal conditions and sustained 80% accuracy in poor-visibility conditions (fog/night), significantly outperforming standard libraries. The 6-month initiative successfully bridged the gap between theoretical AI study and practical, production-grade deployment.
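The write-up does not say how accuracy was measured. As a rough sketch of one common convention, the snippet below scores a read as correct only when the whole plate string matches the ground truth after normalization, and reports the rate per capture condition. The function names and the sample rows are made up for illustration and are not the project's data.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def normalize(plate: str) -> str:
    """Uppercase the plate and drop spaces, dashes, and other separators."""
    return "".join(ch for ch in plate.upper() if ch.isalnum())


def accuracy_by_condition(
    samples: Iterable[Tuple[str, str, str]]
) -> Dict[str, float]:
    """Exact-match accuracy per condition.

    Each sample is (condition, ground_truth, predicted).
    """
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for condition, truth, predicted in samples:
        totals[condition] += 1
        if normalize(truth) == normalize(predicted):
            hits[condition] += 1
    return {c: hits[c] / totals[c] for c in totals}


# Illustrative rows only, not measurements from the project.
demo = [
    ("clear", "ABC123", "ABC 123"),
    ("clear", "XYZ789", "xyz-789"),
    ("fog", "ABC123", "A8C123"),
    ("fog", "JKL456", "JKL456"),
]
print(accuracy_by_condition(demo))
```

Exact-match scoring is strict: a single misread character counts the whole plate as wrong, which is usually the relevant measure when the output feeds a database lookup.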